Ask anyone about a DeFi token or NFT project, and their answer will probably include “DYOR” somewhere. Telling someone to Do Your Own Research is useful for absolving yourself of blame for their aping, but little else. Smart contracts are complex beasts. Fully auditing a protocol yourself before interacting with it is simply not practical for most users.

All is not lost, however. Even non-technical users, who don’t know how to code, can learn some of the most common hazardous patterns. Simple tools like Etherscan are growing increasingly powerful and friendlier to use. A quick scan through a protocol’s code and documentation may not protect you from some esoteric exploit, or a sophisticated scam, but it will probably save you 80% of the time.

Remember, 15 minutes could save you 15% or more on Nexus Mutual smart contract insurance. Or something like that.

Examining Smart Contracts For Dummies

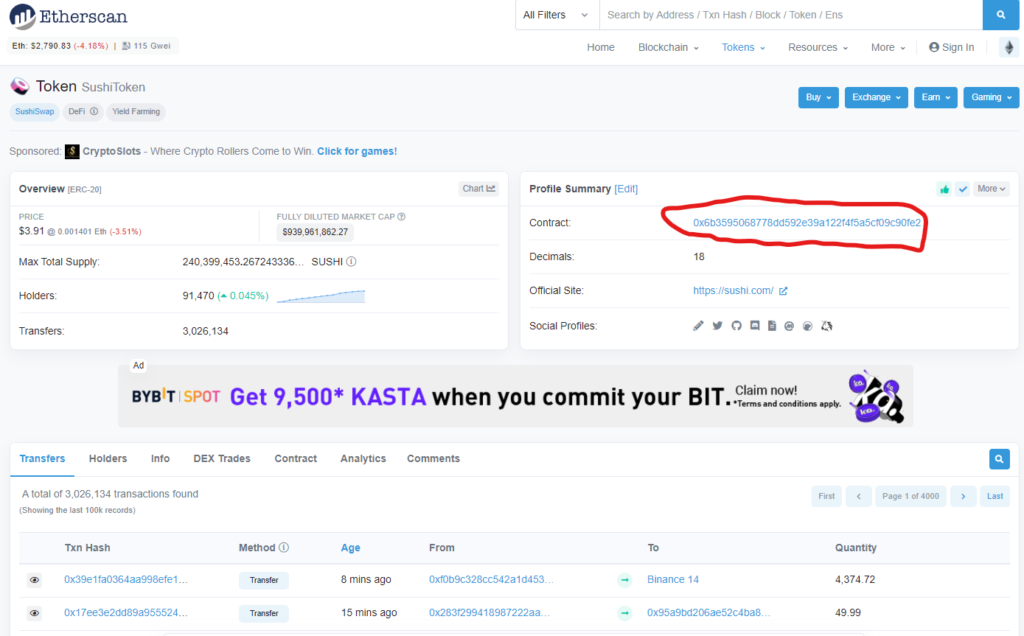

To start, check the token on Etherscan. If you search for a token on Etherscan, you’ll land on the Token page, as below for the Sushiswap SUSHI token. The Contract field will take you from the token summary to the actual smart contract page.

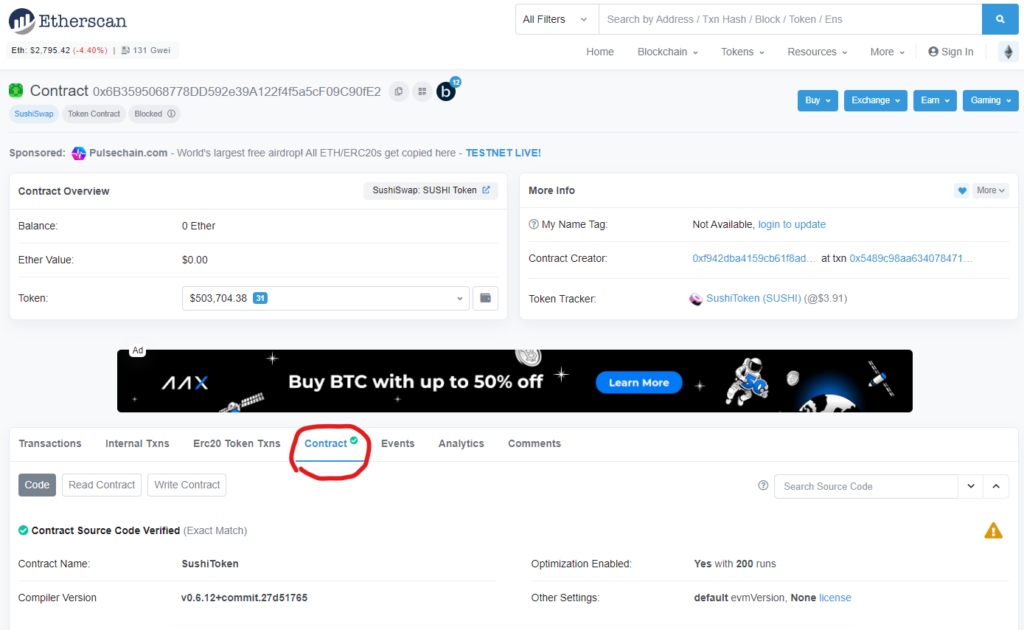

For an example, we’ll look at the Sushiswap SUSHI contract. The contract page contains lots of useful information, but we’re primarily interested in the Contract tab below the overview information.

This will allow you to read the contract’s source code. If you’re not literate in Solidity (the programming language used by the vast majority of smart contracts), don’t worry! We’ll look at some key points you can watch out for even without knowing how to code.

Upgradeable Contracts

One of the key points about code uploaded to the blockchain is that it is to a certain extent immutable. Smart contracts cannot be arbitrarily reprogrammed once deployed. This ensures that protocols will keep working in a decentralized, censorship-resistant manner.

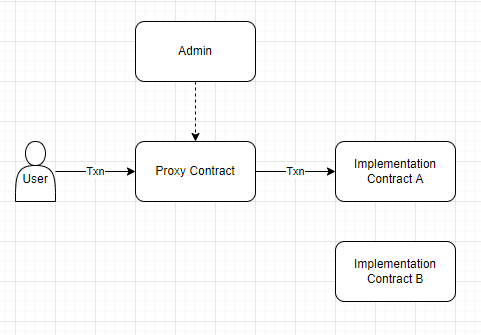

Not being able to change your code has certain downsides, though. What if there’s a bug? What if you want to add a new feature? You have to deploy a whole new contract, migrate over to it… it’s a huge mess. To combat this, some protocols elect to use Upgradeable Proxies.

In simple terms, all this means is that the user always interacts with the same contract, which contains all the state, like token balances. All their transactions to this proxy contract are forwarded to an implementation contract which contains the protocol’s actual code. Critically, there is an admin who is able to change the implementation contract.
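To make the moving parts concrete, here is a minimal sketch of the idea, not any particular protocol’s code. The contract name `MinimalProxySketch` and the function `upgradeTo` are made up for illustration, and the plain storage variables are a simplification: real proxies keep these addresses in special storage slots so they don’t collide with the implementation’s own state.

```solidity
// SPDX-License-Identifier: MIT
pragma solidity ^0.8.0;

// Hypothetical, simplified proxy for illustration only. Do not deploy.
contract MinimalProxySketch {
    // Simplification: real proxies store these in dedicated slots (see ERC-1967)
    // to avoid clashing with the implementation's storage layout.
    address public admin;
    address public implementation;

    constructor(address initialImplementation) {
        admin = msg.sender;
        implementation = initialImplementation;
    }

    // The critical part: the admin can repoint the proxy at new code at any time.
    function upgradeTo(address newImplementation) external {
        require(msg.sender == admin, "not admin");
        implementation = newImplementation;
    }

    // Every other call is forwarded to the implementation with delegatecall,
    // which runs the implementation's code against the proxy's own storage.
    fallback() external payable {
        address impl = implementation;
        assembly {
            calldatacopy(0, 0, calldatasize())
            let result := delegatecall(gas(), impl, 0, calldatasize(), 0, 0)
            returndatacopy(0, 0, returndatasize())
            switch result
            case 0 { revert(0, returndatasize()) }
            default { return(0, returndatasize()) }
        }
    }

    // Accept plain ETH transfers without forwarding them.
    receive() external payable {}
}
```

Notice that nothing in `upgradeTo` asks the users for permission. Whoever holds `admin` decides what code runs on your balances.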

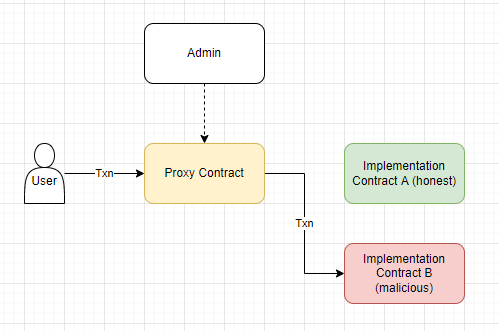

This represents a security risk for one simple reason: the admin can change the implementation contract at will, without the user knowing. Imagine going to start your car in the morning, only to find that your ignition switch has been redirected down a different set of wires to an explosive.

The security of upgradeable contracts relies entirely on who holds the admin rights to the proxy. Ideally, the admin should be a timelocked multisig administered by people you trust. This means that you can’t be rugged on the whims of one person, and even if they try to change it to a malicious implementation, you have a window to get out before the transaction executes.

How To Tell If a Contract Is Upgradeable

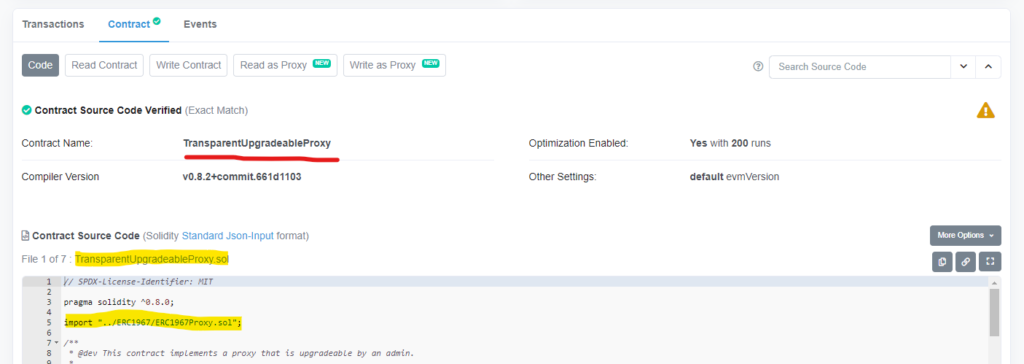

Let’s look at a simple example. I have no idea what this contract is pointing to and it’s on a testnet, but it was the first proxy I found, and it will serve our purposes. Don’t ape, that is financial advice. Here we have several key pieces of evidence to make us sure this is an upgradeable contract:

- Etherscan flagged it as a proxy and provides read/write as proxy options to us

- The code uses terms such as “Upgradeable”, “Proxy”, “Delegate”, “delegatecall”, or “Implementation”.

- The code references ERC-1967, the standard that defines where the most common proxy pattern stores its implementation and admin addresses

- This exact contract is the OpenZeppelin standard implementation of an ERC-1967 proxy pattern
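If you want to know what the ERC-1967 fingerprints look like in source code, the sketch below shows the two storage slot constants the standard defines. The contract name `Erc1967MarkersSketch` and the function `currentImplementation` are my own illustrative names; spotting either long hex constant (or the strings they are derived from) in a contract is a strong hint that you are looking at a proxy.

```solidity
// SPDX-License-Identifier: MIT
pragma solidity ^0.8.0;

// Hypothetical contract showing the telltale ERC-1967 constants.
contract Erc1967MarkersSketch {
    // keccak256("eip1967.proxy.implementation") minus 1
    bytes32 internal constant IMPLEMENTATION_SLOT =
        0x360894a13ba1a3210667c828492db98dca3e2076cc3735a920a3ca505d382bbc;

    // keccak256("eip1967.proxy.admin") minus 1
    bytes32 internal constant ADMIN_SLOT =
        0xb53127684a568b3173ae13b9f8a6016e243e63b6e8ee1178d6a717850b5d6103;

    // Reads whatever address currently sits in the implementation slot
    // of this contract's own storage.
    function currentImplementation() public view returns (address impl) {
        bytes32 slot = IMPLEMENTATION_SLOT;
        assembly {
            impl := sload(slot)
        }
    }
}
```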

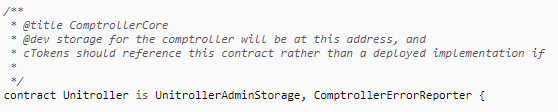

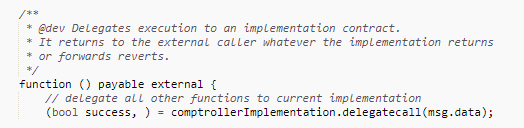

A more complex example is the Compound Unitroller contract. This contract is the proxy for their Comptroller contract, which governs their lending and borrowing ecosystem.

It’s harder to tell that this one is a proxy, because it doesn’t use a standard proxy pattern but implements a custom one. Additionally, the code file is “flattened”, which means we have to scroll past aaaaaall the imported code to find the main code. Way down at the bottom, at line 2463, we find the Unitroller contract itself.

Not Using Multisigs

A multi-signature wallet contract, or multisig for short, is a contract that adds an extra layer of security for critical applications. A multisig will only execute a transaction if a threshold number of its signers provide a cryptographically verified approval of that transaction. It works like a nuclear missile launch that needs two keys turned simultaneously, so one person can’t launch on a whim.
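The threshold idea can be sketched in a few dozen lines. This is a toy, assuming on-chain approvals and made-up names (`MultisigSketch`, `propose`, `approve`, `execute`); production multisigs such as Gnosis Safe are considerably more involved.

```solidity
// SPDX-License-Identifier: MIT
pragma solidity ^0.8.0;

// Hypothetical k-of-n multisig for illustration only. Do not deploy.
contract MultisigSketch {
    struct Proposal {
        address target;    // contract to call
        bytes data;        // encoded function call
        uint256 approvals; // how many signers have approved so far
        bool executed;
    }

    mapping(address => bool) public isSigner;
    uint256 public threshold;
    Proposal[] public proposals;
    mapping(uint256 => mapping(address => bool)) public approvedBy;

    constructor(address[] memory initialSigners, uint256 initialThreshold) {
        require(
            initialThreshold > 0 && initialThreshold <= initialSigners.length,
            "bad threshold"
        );
        for (uint256 i = 0; i < initialSigners.length; i++) {
            require(!isSigner[initialSigners[i]], "duplicate signer");
            isSigner[initialSigners[i]] = true;
        }
        threshold = initialThreshold;
    }

    modifier onlySigner() {
        require(isSigner[msg.sender], "not a signer");
        _;
    }

    function propose(address target, bytes calldata data)
        external
        onlySigner
        returns (uint256 id)
    {
        proposals.push(Proposal(target, data, 0, false));
        id = proposals.length - 1;
    }

    // Each signer can approve a given proposal once.
    function approve(uint256 id) external onlySigner {
        require(!approvedBy[id][msg.sender], "already approved");
        approvedBy[id][msg.sender] = true;
        proposals[id].approvals += 1;
    }

    // Nothing happens until enough distinct signers have approved.
    function execute(uint256 id) external onlySigner {
        Proposal storage p = proposals[id];
        require(!p.executed, "already executed");
        require(p.approvals >= threshold, "not enough approvals");
        p.executed = true;
        (bool ok, ) = p.target.call(p.data);
        require(ok, "call failed");
    }
}
```

The contract only counts distinct addresses, not distinct humans. That is exactly why knowing who the signers are matters as much as the threshold.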

Any serious protocol will use multisigs for all critical permissioned accounts. This means treasuries, marketing/community funds, addresses with administrative rights over the protocols, addresses who are allowed to mint tokens, blacklist users, pause the protocol, etc.

If a multisig is not used, you can potentially be rugged on the whims of a single person, or by a single private key being compromised.

Note also that a high-threshold multisig (e.g. one that requires 9 of 10 signers to execute) does not guarantee security. Unless the addresses that sit as signers are known to you, multiple wallets may be controlled by a single person (a Sybil attack). Insist on knowing who controls which addresses.

If a team tries to explain away the need for a multisig, run far, far away. Multisig contracts like the industry-standard Gnosis Safe are some of the most battle-tested pieces of code on the blockchain. There is no legitimate reason not to use them for all funds-handling and permissioned applications. You can, and should, inquire about the multisigs of all your projects, and their signers.

What You Can Do

Read the protocol documentation; multisigs are sometimes listed there. If not, ask the team on Discord/Twitter/Telegram/etc. If it takes too much detective work to find the info, that may be a red flag. Insist on knowing who the signers are.

Pro tip: If the multisig is a Gnosis Safe instance (many are), you can load the address into the Gnosis Safe App and easily spy on all the wallet’s juicy details, like transaction history, who approved/executed each transaction, and any pending transactions.

Token Approvals

This is not necessarily a hazardous pattern by itself. Approvals are part of all token standards, and are a critical part of DeFi. They are, however, extremely misunderstood, and can be very dangerous when combined with hazardous patterns.

When you approve an address for X amount of tokens, that address can do whatever it wants with X amount of your tokens. At any time, without any interaction from you. It can spend those tokens just like they are its own money. I cannot stress this enough.

Approving an address to spend your tokens should be taken with the same seriousness as sending the tokens to that address.

This is especially dangerous when combined with the fact that, to save gas fees and improve user experience, most protocol front ends will default to having you grant unlimited approval. This is VERY poorly understood. The end result is that when you go to the SafeDogeMoonFarm website and think you’re approving it to spend the 200 DAI you plan to ape into it, you are usually approving it to spend all the DAI in your wallet, plus any DAI that ever comes into your wallet in the future.

I’ll say it again. An unlimited token approval means the approved address has access to every single cent of that token, now and forever, unless you deliberately revoke its access.
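Here is a rough sketch of why that is, using the standard ERC-20 functions `allowance`, `balanceOf`, and `transferFrom`. The contract `SpenderSketch` and its `deposit` and `drain` functions are hypothetical, standing in for whatever address you approved.

```solidity
// SPDX-License-Identifier: MIT
pragma solidity ^0.8.0;

// Subset of the standard ERC-20 interface used by this sketch.
interface IERC20 {
    function allowance(address owner, address spender) external view returns (uint256);
    function balanceOf(address account) external view returns (uint256);
    function transferFrom(address from, address to, uint256 amount) external returns (bool);
}

// Hypothetical approved spender, for illustration only.
contract SpenderSketch {
    address public owner;

    constructor() {
        owner = msg.sender;
    }

    // What you think you signed up for: the protocol pulls your deposit.
    function deposit(IERC20 token, uint256 amount) external {
        require(token.transferFrom(msg.sender, address(this), amount), "transfer failed");
    }

    // What the same approval also allows: pulling up to the full allowance
    // later, with no new signature or transaction from the victim.
    function drain(IERC20 token, address victim) external {
        require(msg.sender == owner, "not owner");
        uint256 allowed = token.allowance(victim, address(this));
        uint256 balance = token.balanceOf(victim);
        uint256 amount = allowed < balance ? allowed : balance;
        require(token.transferFrom(victim, owner, amount), "transfer failed");
    }
}
```

With an unlimited approval, `allowed` is effectively infinite, so `drain` is limited only by the victim’s balance. Calling the token’s `approve` again with an amount of 0 for that spender is what shuts the door.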

Imagine paying for your groceries by giving Walmart the account and routing number for your bank account.

This is especially dangerous with upgradeable contracts, as the owner can arbitrarily replace the application logic with something that simply transfers every token it has approval for to their personal wallet. Use extreme caution when approving upgradeable contracts.

What You Can Do

The key defense here is to practice good wallet management and good token approval hygiene. Don’t keep all your funds in one wallet. If you don’t plan on repeated use of a protocol, don’t approve it for more than you plan to transfer. Revoke your approval for protocols you no longer use.

Etherscan has a handy tool for revoking approvals, as does Revoke.cash, or you can do it via the contract itself. (Read: How to manually revoke token approvals without Etherscan)

And for God’s sake, don’t give unlimited approval to random projects.

Easily-Changed Critical Variables

Many contracts allow changing critical variables such as which addresses have admin permissions, where user funds go on an emergency withdrawal, the fees the protocol extracts, etc. This can be done for a variety of legitimate reasons, including compatibility with future plans for DAO governance or architectural upgrades.

If access to these critical variables is not tightly controlled both on and off-chain, you may be at risk. We can imagine the following scenario:

- Hacker compromises a permissioned developer wallet

- Hacker uses that wallet’s credentials to set his own address as protocol administrator

- Hacker uses his new status as admin to emergency withdraw all the protocol funds to the admin account – which is now his back pocket

This circles back to the use of well-administered multisigs for critical permissioned roles. This is a crucial layer of protection.
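In code, the scenario above needs surprisingly little. The sketch below is a hypothetical contract (`CompromisedAdminSketch` is my name for it) where a single key guards both the admin role and the emergency exit.

```solidity
// SPDX-License-Identifier: MIT
pragma solidity ^0.8.0;

// Hypothetical vulnerable setup for illustration only. Do not deploy.
contract CompromisedAdminSketch {
    address public admin;

    constructor() {
        admin = msg.sender;
    }

    modifier onlyAdmin() {
        require(msg.sender == admin, "not admin");
        _;
    }

    // Step 2 of the scenario: whoever controls the current admin key
    // can hand the role to any address, instantly.
    function setAdmin(address newAdmin) external onlyAdmin {
        admin = newAdmin;
    }

    // Step 3: the emergency exit sends everything to the admin,
    // whoever that happens to be now.
    function emergencyWithdraw() external onlyAdmin {
        (bool ok, ) = payable(admin).call{value: address(this).balance}("");
        require(ok, "withdraw failed");
    }

    // Lets the contract hold ETH deposited by users.
    receive() external payable {}
}
```

The access control here technically works. The problem is that one stolen key is enough to pass it.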

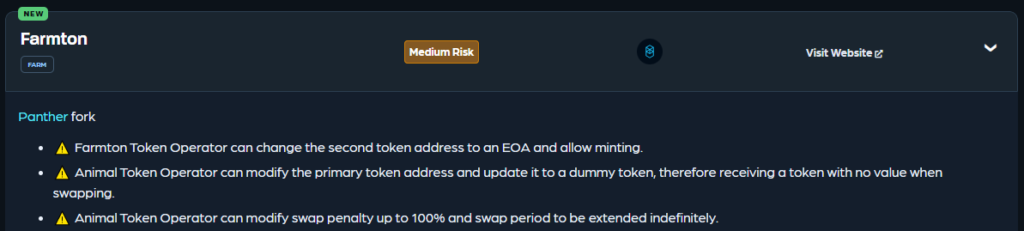

Let’s look at this snippet from RugDoc for some practical examples.

Here we can see that the RugDoc review of this micro-cap project shows that critical parameters like the underlying token addresses can be modified. Additionally, they can update a fee parameter to make the protocol just… take all your money when you swap and give you nothing back.

What You Can Do

If a protocol has the ability to update key parameters, they should only be accessible from a timelocked multisig. This gives a measure of protection against single malicious actors, and gives you the ability to review key transactions and get out safely if something malicious is coming.
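A timelock can be sketched as a two-step change: queue it publicly, then apply it only after a delay. The names (`TimelockedFeeSketch`, `queueFee`, `applyFee`), the two-day delay, and the 1% cap are all assumptions chosen for illustration, and in a real deployment the `admin` would ideally be a multisig.

```solidity
// SPDX-License-Identifier: MIT
pragma solidity ^0.8.0;

// Hypothetical timelocked parameter for illustration only.
contract TimelockedFeeSketch {
    uint256 public constant DELAY = 2 days;   // assumed delay
    uint256 public constant MAX_FEE_BPS = 100; // assumed hard cap of 1%

    address public immutable admin;
    uint256 public feeBps;
    uint256 public pendingFeeBps;
    uint256 public pendingFeeEta; // earliest time the change may be applied

    event FeeChangeQueued(uint256 newFeeBps, uint256 eta);

    constructor(address initialAdmin) {
        admin = initialAdmin;
    }

    // Step 1: announce the change on-chain where everyone can see it.
    function queueFee(uint256 newFeeBps) external {
        require(msg.sender == admin, "not admin");
        require(newFeeBps <= MAX_FEE_BPS, "fee too high");
        pendingFeeBps = newFeeBps;
        pendingFeeEta = block.timestamp + DELAY;
        emit FeeChangeQueued(newFeeBps, pendingFeeEta);
    }

    // Step 2: the change only takes effect once the delay has passed.
    function applyFee() external {
        require(pendingFeeEta != 0, "nothing queued");
        require(block.timestamp >= pendingFeeEta, "still timelocked");
        feeBps = pendingFeeBps;
        pendingFeeEta = 0;
    }
}
```

The `FeeChangeQueued` event is your early warning, and the hard cap means even a malicious admin can’t set the fee to “all of it”.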

You should also conduct your own review to see if anything critical can be changed. Key things to look for, again, are admin addresses, permission granting, blacklisting/whitelisting, setting underlying token or router address, minting, and setting fees, to name a few.

What To Look For

Finding out if critical parameters can be updated requires slightly more Solidity literacy, especially if the developers are trying to be sneaky about it. The snippet below may give you some idea of what to look for.

    // Note: the addresses below are abbreviated placeholders, not real values.

    contract SafeContract1 {
        // Permissioned address with admin rights
        address public admin;

        // The constructor cannot be called after the contract is deployed.
        // This code will only ever be called a single time.
        constructor(address initialAdmin) {
            // Set the admin address
            admin = initialAdmin;
        }

        // This is safe as long as the admin variable is not set anywhere else in the code
    }

    contract SafeContract2 {
        // Permissioned address with admin rights
        address public admin = '0xabc123...69420';

        // This is safe as long as the admin variable is not set anywhere else in the code
    }

    contract SafeContract3 {
        // Presence of the 'constant' keyword means this cannot be modified later
        address public constant admin = '0xabc123...69420';

        // This is also safe from later modification due to the 'immutable' keyword
        address public immutable treasury = '0x69420...abc123';
    }

    contract UnsafeContract1 {
        // Permissioned address with admin rights
        address public admin;

        // This function can be called at any point.
        // Even if there is access control on it, the possibility of this critical address being modified exists.
        function setAdmin(address newAdmin) public {
            admin = newAdmin;
        }
    }

Accessible Sensitive Functions

Quick Solidity lesson here. The language requires you to set a modifier called visibility on each function, which determines who can call it. The options are as follows:

- Public – anyone can call this function

- External – can be called from outside this contract (by user wallets and other contracts), but not by a plain internal call from within it

- Internal – may only be called by this contract or a contract that inherits it

- Private – may only be called by this contract

For the purposes of basic security checks, you mainly need to care about the groupings of Public+External (exposed functions) and Internal+Private (unexposed).
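Here is what the four keywords look like in practice. `VisibilitySketch` is a made-up contract; the thing to train your eye on is the word that follows each function’s parameter list.

```solidity
// SPDX-License-Identifier: MIT
pragma solidity ^0.8.0;

// Hypothetical contract showing all four visibility keywords.
contract VisibilitySketch {
    uint256 private counter;

    // Exposed: anyone can call this, including this contract itself.
    function bumpPublic() public {
        _bump();
    }

    // Exposed: callable from outside the contract.
    function bumpExternal() external {
        _bump();
    }

    // Unexposed: only reachable through this contract or contracts inheriting it.
    function _bump() internal {
        counter = _next(counter);
    }

    // Unexposed: only reachable from within this exact contract.
    function _next(uint256 value) private pure returns (uint256) {
        return value + 1;
    }

    // Exposed, but read-only.
    function current() external view returns (uint256) {
        return counter;
    }
}
```

An outside caller can only ever reach `_bump` and `_next` by going through `bumpPublic` or `bumpExternal`, so those two are the doors worth inspecting.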

Any function which is exposed can be called at will by external actors. Depending on the function there may be further checks that prevent unauthorized access, but there is nothing stopping anyone on this planet from poking that function with a stick. Each exposed function is an entry point that can become an attack vector if there is a bug in the underlying code.

Unexposed functions can only be called by the smart contract itself, i.e. they will be triggered by calls within the code of exposed functions. For example, a call to deposit assets into an LP pool will trigger several bits of code, including an internal function call to mint LP tokens to your address. This means they are subject to the checks built into the code (e.g. the protocol won’t mint you LP tokens if you don’t provide it assets).

The takeaways here: One, if sensitive functions like minting tokens are exposed, they should be behind good access controls, timelocks, and multisigs or governance. You don’t want the only thing between you and infinite token supply to be an onlyAdmin check on a mint function.

Two, if a function is exposed without good reason, it’s a potential red flag for the protocol. Either the developers were careless, the protocol ecosystem is not well-conceived, or malicious activity may be afoot. Honestly, there are not a lot of legitimate reasons to have exposed mint functions.
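To see the difference side by side, consider this sketch of a hypothetical LP token (`LpTokenSketch`, with a simplified 1:1 ETH deposit that I made up for the example). One path to minting is earned, the other is just permitted.

```solidity
// SPDX-License-Identifier: MIT
pragma solidity ^0.8.0;

// Hypothetical LP token for illustration only. Do not deploy.
contract LpTokenSketch {
    mapping(address => uint256) public balanceOf;
    uint256 public totalSupply;
    address public admin;

    constructor() {
        admin = msg.sender;
    }

    // The intended path: you only get tokens if you actually provide assets.
    function deposit() external payable {
        require(msg.value > 0, "no assets provided");
        _mint(msg.sender, msg.value);
    }

    // The red flag: an exposed mint guarded by nothing but a single address check.
    // Whoever controls 'admin' can create unlimited supply out of thin air.
    function mint(address to, uint256 amount) external {
        require(msg.sender == admin, "not admin");
        _mint(to, amount);
    }

    // Unexposed: can only be reached through the exposed functions above.
    function _mint(address to, uint256 amount) internal {
        totalSupply += amount;
        balanceOf[to] += amount;
    }
}
```

If you delete the `mint` function, the contract still does its job, which is usually a good sign that the function didn’t need to be there.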

What You Can Do

Take a basic look at the code. Well-designed smart contracts should have clear ways that you are intended to interact with the contract. If it looks like you probably shouldn’t be able to call something, that can be cause for concern.

As an analogy, if you go to a McDonald’s, there are accepted ways to interact with the restaurant. Go to the counter, order food, eat food, refill drink, etc. You should not be allowed to go into the office, or walk into the kitchen and drop a basket of fries. You definitely shouldn’t be allowed to open the cash registers.

Wrapping Up

If you’re not a sophisticated Solidity coder, you do have to rely on audit firms and security professionals to catch complex issues. I’ve also written a ranking of the best smart contract auditors to help you know which firms to trust.

Most smart contract users won’t become smart contract auditors – and you shouldn’t have to. A little due diligence will go a long way towards keeping you safe. Yes, it may be boring to dig through Etherscan for a few minutes instead of aping and being done with it, but it could save your bags.

The transparency and decentralization of blockchain technology gives you the opportunity to see behind the curtain in ways that are just not possible in the traditional world. You have the opportunity to check a protocol’s business logic for yourself instead of trusting them blindly. Take advantage of that.